AI Summary

This AI-generated content is derived from the source article.

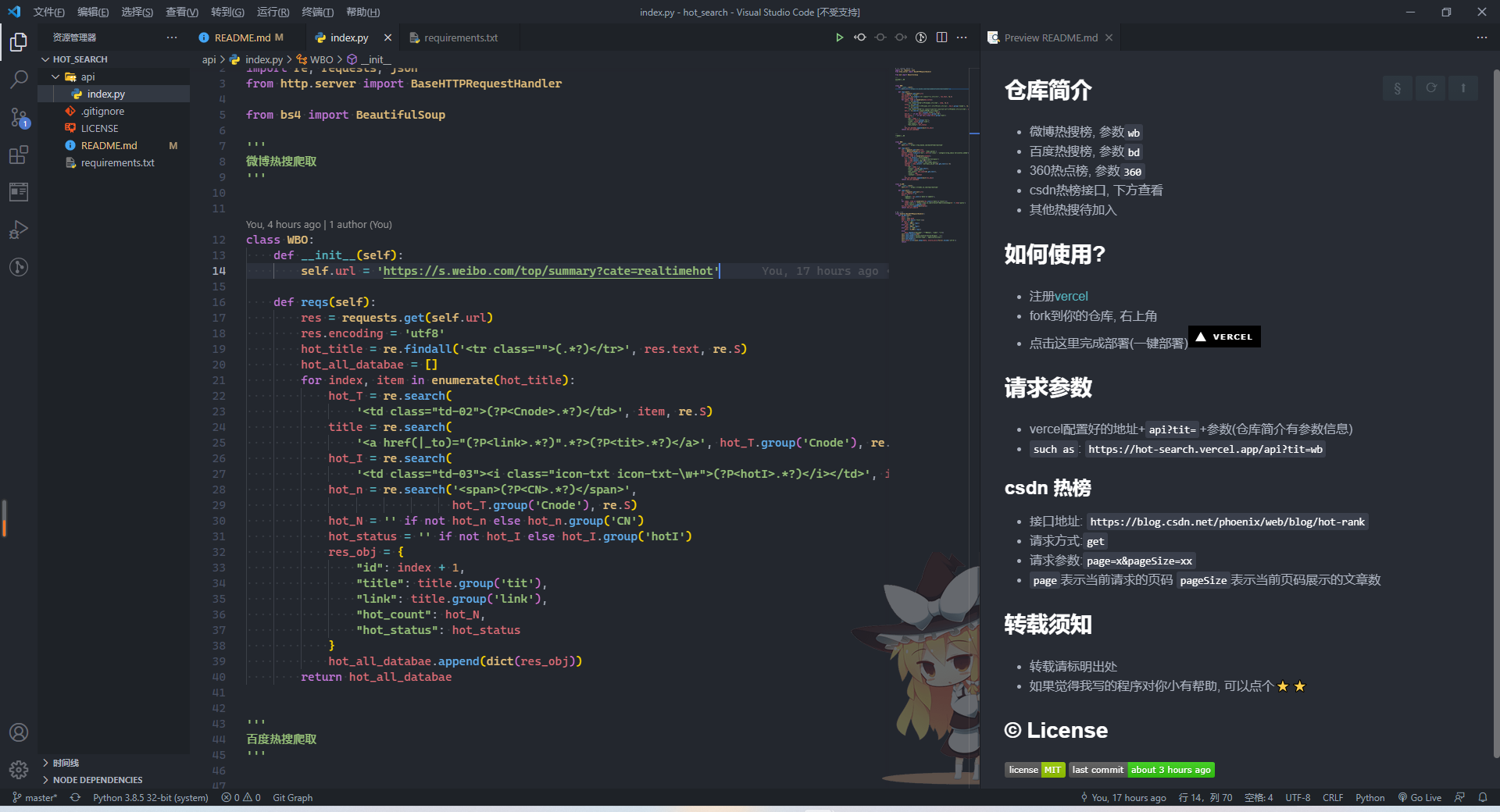

The project showcases web s

Project Code Display #

- The project's deployment instructions have been uploaded to GitHub. Project address: GitHub Address

Technologies Used #

- Python regular expressions

- BeautifulSoup4 library

- XPath parsing

Regular Expressions #

- Similar to JavaScript's matching methods

- Import the `re` package before use

- Several matching methods: `match`, `search`, `compile`, `findall`, `finditer`

re.match(a, b, c) #

- Three parameters: matching rule, string to match, matching mode

- Matches only from the first position of the string. On success it returns a match object, whose `.span()` gives the `(start, end)` index positions; otherwise it returns `None`

- `result.groups()` returns a tuple containing all captured groups. Use `result.group(num)` to return the string captured by group `num` (numbering starts from 1; `group(0)` is the whole match)
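As a minimal sketch of the behavior described above, the following uses a made-up sample string and date pattern (both are assumptions chosen only for illustration) to show `span()`, `groups()`, and `group(num)` on a successful match, and the `None` result when the pattern does not occur at the start.

```python
import re

# Hypothetical sample text, chosen only for illustration.
text = "2024-05-17 release notes"

result = re.match(r"(\d{4})-(\d{2})-(\d{2})", text)
if result is not None:
    span = result.span()      # (start, end) of the whole match
    groups = result.groups()  # all captured groups as a tuple
    year = result.group(1)    # first group; numbering starts at 1
    whole = result.group(0)   # group(0) is the entire match

# match() only tries the start of the string, so this returns None
# even though "release" appears later in the text.
no_match = re.match(r"release", text)
```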

re.search(a, b, c) #

- Also three parameters, same as above

- Groups are retrieved the same way. The only difference is that `search` does not have to match from the beginning: it scans the string and returns the first successful match

- Note: Only returns one match, not multiple
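The difference from `match` can be sketched on a single hypothetical input string (the string and pattern are illustrative assumptions): `match` fails because the text does not start with a digit, while `search` finds the first run of digits and stops there.

```python
import re

# Hypothetical input, chosen only for illustration.
text = "id=42; id=77"

by_match = re.match(r"\d+", text)    # None: the string does not start with a digit
by_search = re.search(r"\d+", text)  # first run of digits anywhere in the string

first_number = by_search.group()     # only the first hit is reported
first_span = by_search.span()        # its (start, end) position
```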

re.sub(a, b, c, d, e) #

- Performs replacement operations

- `a`: Pattern string in the regular expression

- `b`: String to replace with; can also be a function

- `c`: Original string

- `d`: Maximum number of replacements after matching, default is 0 (replace all matches)

- `e`: Matching mode, numeric form
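A short sketch of these parameters, assuming an invented sample string and a hypothetical helper named `double`. The count and the matching mode are passed by keyword (`count=`, `flags=`), which avoids mixing up the two trailing positions.

```python
import re

# Hypothetical sample text, chosen only for illustration.
text = "a1b22c333"

# Default count of 0 replaces every match.
all_replaced = re.sub(r"\d+", "#", text)

# count=1 stops after the first replacement.
first_only = re.sub(r"\d+", "#", text, count=1)

def double(match):
    # Hypothetical replacement function: receives a match object, returns a string.
    return str(int(match.group()) * 2)

doubled = re.sub(r"\d+", double, text)

# The matching mode is given through flags.
case_insensitive = re.sub(r"A", "x", text, flags=re.I)
```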

re.compile(a, b) #

- Compiles a regular expression into a pattern object, whose `match` and `search` methods can then be called

- To get the whole match, call `group()` with no parameter or with `0`

- The largest valid `group` parameter depends on the number of capturing groups in your regular expression

- The `start`, `end`, and `span` methods return the index positions of the matched text in the original string
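The points above can be sketched with a compiled pattern. The email-like pattern and the sample text are assumptions made up for this example.

```python
import re

# Hypothetical pattern with two capturing groups.
pattern = re.compile(r"(\w+)@(\w+)\.com")
text = "contact: alice@example.com"

m = pattern.search(text)

whole = m.group()                # same as m.group(0): the entire match
user = m.group(1)                # first capturing group
domain = m.group(2)              # second capturing group
start, end = m.start(), m.end()  # index positions in the original string
span = m.span()                  # the same positions as one tuple
group_count = pattern.groups     # number of capturing groups in the pattern
```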

findall(a, b, c, d) #

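- Parameters: regular expression, string to match, start position, end position. The start and end positions are accepted by the compiled pattern's method, `pattern.findall(string, pos, endpos)`; the module-level `re.findall(pattern, string, flags)` takes a matching mode as its third parameter instead

- Returns all non-overlapping substrings that meet the conditions in a list. If none, returns an empty list

- If the pattern contains capturing groups, returns the text captured by the groups instead (a list of tuples when there are several groups), which can be iterated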
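A brief sketch of the three return shapes, plus the start and end positions on a compiled pattern. The sample string is an assumption for illustration only.

```python
import re

# Hypothetical sample text.
text = "x=1, y=22, z=333"

numbers = re.findall(r"\d+", text)       # no groups: list of matched substrings
pairs = re.findall(r"(\w)=(\d+)", text)  # two groups: list of tuples
none_found = re.findall(r"[A-Z]", text)  # no match: empty list

# Start and end positions are only available on a compiled pattern.
pattern = re.compile(r"\d+")
windowed = pattern.findall(text, 4, 10)  # searches text[4:10] only
```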

finditer(a, b, c) #

- Parameters: matching rule, string to match, matching mode

- Similar to `findall`, but returns an iterator of match objects that can be used with `for ... in`
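Because `finditer` yields match objects rather than plain strings, each hit also carries its position. A minimal sketch on a hypothetical sample string:

```python
import re

# Hypothetical sample text.
text = "x=1, y=22, z=333"

positions = []
for m in re.finditer(r"\d+", text):
    # Each item is a match object, so both the text and its span are available.
    positions.append((m.group(), m.span()))
```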

re.split(a, b, c, d) #

- Splits the string into a list wherever the matching rule matches

- Parameters: matching rule, string to split, number of splits (default is 0, unlimited), matching mode
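As an illustration, the sketch below splits a made-up string on commas, semicolons, and whitespace, first without a limit and then with `maxsplit=1`. The input and the separator pattern are assumptions.

```python
import re

# Hypothetical sample text with mixed separators.
text = "a, b;c  d"

parts = re.split(r"[,;\s]+", text)                # unlimited splits
limited = re.split(r"[,;\s]+", text, maxsplit=1)  # at most one split
```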

Regular Expression Modifiers #

- `re.I`: Case-insensitive matching

- `re.L`: Locale-aware matching

- `re.S`: Makes `.` match all characters, including newlines

- `re.M`: Multi-line matching, affects `^` and `$`

- `re.U`: Parses characters based on the Unicode character set. Affects `\w`, `\W`, `\b`, `\B`

- `re.X`: Allows more flexible formatting for better readability of regular expressions
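The effect of the most common modifiers can be sketched on one hypothetical two-line string (the text and patterns are illustrative assumptions):

```python
import re

# Hypothetical two-line sample text.
text = "First line\nsecond LINE"

ignore_case = re.findall(r"line", text, flags=re.I)   # matches both spellings
line_starts = re.findall(r"^\w+", text, flags=re.M)   # ^ matches at each line start

without_dotall = re.search(r"First.*LINE", text)              # . stops at the newline
with_dotall = re.search(r"First.*LINE", text, flags=re.S)     # . also matches newline

# re.X lets the pattern span several lines and carry comments.
verbose = re.compile(r"""
    (\w+)   # a word
    \s+     # whitespace
    (\w+)   # another word
""", re.X)
first_two_words = verbose.match(text).groups()
```

Several modifiers can be combined with `|`, for example `flags=re.I | re.M`.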

Python Regular Expression Detailed Explanation (Super Detailed, Must Learn!)

XPath Method #

- Install the `lxml` library first: `pip install lxml`

- XPath uses path expressions to navigate XML documents

- Can parse local HTML files or directly parse HTML strings

Common XPath Rules #

- `nodename`: Selects all child nodes of the node named `nodename`

- `/`: Selects direct child nodes of the current node

- `//`: Selects descendants of the current node, at any depth

- `.`: Selects the current node

- `..`: Selects the parent node of the current node

- `@`: Selects attributes

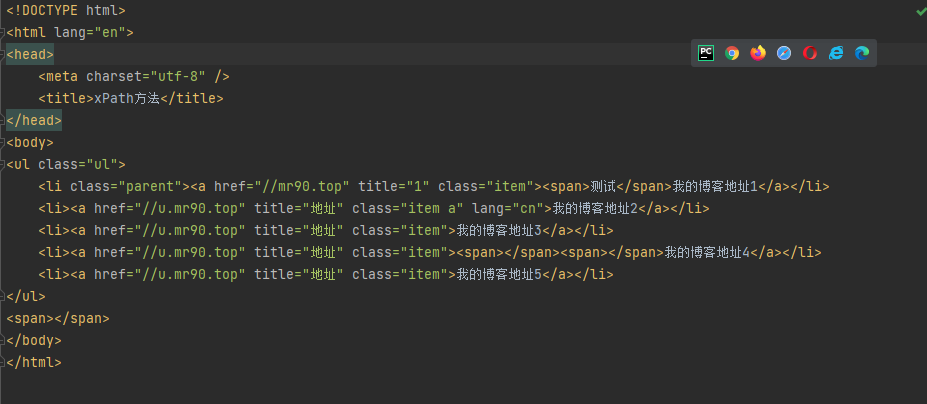
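A minimal sketch of these rules with `lxml`, parsing a small HTML string rather than a file. The markup, ids, and link targets are invented for illustration and are not taken from the project.

```python
from lxml import etree

# Hypothetical HTML fragment, parsed directly from a string.
html = etree.HTML("""
<ul id="menu">
  <li class="item"><a href="/home">Home</a></li>
  <li class="item"><a href="/about">About</a></li>
</ul>
""")

all_li = html.xpath("//li")             # //  : descendants at any depth
direct_links = html.xpath("//ul/li/a")  # /   : step to direct children
hrefs = html.xpath("//a/@href")         # @   : select an attribute
parent_id = html.xpath("//li/../@id")   # ..  : go up to the parent node

ul = html.xpath("//ul")[0]
current = ul.xpath(".")                 # .   : the current node itself
```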

Local Display #

- First method: Use `etree.parse` to parse locally

```python
# coding=utf-8
from lxml import etree

html = etree.parse('./index.html', etree.HTMLParser())
print(etree.tostring(html))
```

- Second method: Use `etree.HTML`

```python
# coding=utf-8
from lxml import etree

with open('./index.html', 'rb') as fp:
    html = fp.read().decode('utf-8')

selector = etree.HTML(html)  # etree.HTML(source) recognizes it as an object that can be parsed by XPath
print(selector)
```

- Match all nodes using the `//*` rule

- Match all specified nodes using `//node_name`

- Match direct child nodes by replacing `//` with `/`

- Get parent node attribute values using `../@attribute_name`

- Attribute matching uses the `@attribute_name` form

- Text retrieval has two methods: `/text()` and `//text()`. The first retrieves only the text directly inside the node, while the second also retrieves the text of all descendants, including the whitespace generated by line breaks

- Retrieve attributes using `/@href`

- For attributes containing multiple values, use the `contains()` method

- Multi-attribute matching uses the `and` operator with the `contains()` method
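The last few rules can be sketched as follows. The HTML fragment, class names, and `name` attributes are assumptions invented for this example. It contrasts exact attribute matching with `contains()`, combines two conditions with `and`, and shows how `/text()` and `//text()` differ.

```python
from lxml import etree

# Hypothetical HTML fragment; the first <li> has a multi-valued class attribute.
html = etree.HTML("""
<ul>
  <li class="item active" name="first"><a href="/a">A</a></li>
  <li class="item" name="second"><a href="/b">B</a></li>
</ul>
""")

# Exact comparison only matches class="item", not class="item active".
exact = html.xpath('//li[@class="item"]/a/text()')

# contains() matches any class attribute that includes "item".
multi = html.xpath('//li[contains(@class, "item")]/a/text()')

# Multi-attribute matching: both conditions must hold.
both = html.xpath('//li[contains(@class, "active") and @name="first"]/a/text()')

# /text() returns only text directly inside <ul> (here: line-break whitespace);
# //text() also descends into the <li> and <a> elements.
direct_text = html.xpath('//ul/text()')
all_text = html.xpath('//ul//text()')
visible_text = [t for t in all_text if t.strip()]
```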

XPath Operators #

- Division and modulo are special; others are the same as basic operators

- Division uses `div`, e.g., `8 div 4`

- Modulo uses `mod`, e.g., `1 mod 2`

- Also, `and` and `or` represent conjunction and disjunction

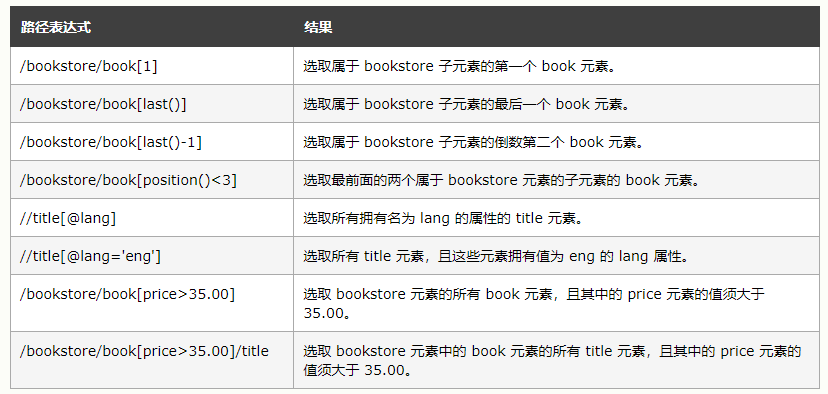
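These operators can be tried directly through `lxml`. The sketch below assumes a tiny made-up XML document with `price` attributes. Note that arithmetic expressions come back as floats.

```python
from lxml import etree

# Hypothetical XML document, used only to have something to query.
root = etree.XML("<r><p price='8'/><p price='3'/><p price='12'/></r>")

quotient = root.xpath("8 div 4")   # division uses div, not /
remainder = root.xpath("7 mod 2")  # modulo uses mod

# and / or combine conditions inside a predicate.
mid_range = root.xpath("//p[@price > 5 and @price < 10]/@price")
extremes = root.xpath("//p[@price = 3 or @price = 12]/@price")
even = root.xpath("//p[@price mod 2 = 0]/@price")
```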

Sequential Selection #

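- XPath 2.0 and later define over 100 built-in functions; `lxml` implements XPath 1.0, which offers a smaller core set that includes `position()` and `last()` for selecting nodes by position. Refer to XPath Functions for details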

Node Axis Selection #

- Get the `href` attribute value of all `a` nodes under the current node: `child::a/@href`

- Get the attribute value of the specified element of the current node: `attribute::attribute_name`

- Get all child elements of the current node: `child::*`

- Get the attribute values of all attributes of the current node: `attribute::*`

- Get all child nodes of the current node: `child::node()`

- Get all text child nodes of the current element: `child::text()`

- Get all ancestor `li` elements of the current element (including the current element): `ancestor-or-self::li`

- XPath Axes

- XPath Pitfalls Guide

Demo Code #

```python
# coding=utf-8
from lxml import etree

# fp = open('./index.html', 'rb')
# html = fp.read().decode('utf-8')  # .decode('gbk')
# selector = etree.HTML(html)  # etree.HTML(source) recognizes it as an object that can be parsed by XPath
# print(selector)

html = etree.parse('./index.html', etree.HTMLParser())
# print(etree.tostring(html).decode('utf-8'))

all_node = html.xpath('//*')  # Get all nodes: //*
part_node = html.xpath('//li')  # Get part of the nodes: //node_name
child_node = html.xpath('//li/a')  # Match child nodes
parent_node = html.xpath('//a[@href="//ryanuo.cc"]/../@class')  # Get parent node attribute value: ../@attribute_name
attrs_node = html.xpath('//a[contains(@class,"a")]/text()')  # Match attributes containing multiple values: contains() method

# Sequential retrieval
first_node = html.xpath('//li[1]/a/text()')  # Get the first node
last_node = html.xpath('//li[last()]//text()')  # Get the last node
front_node = html.xpath('//li[position()<3]//text()')  # Get the first two nodes
end_node = html.xpath('//li[last()-2]//text()')  # Get the third-to-last node

# Axis nodes
child_node_z = html.xpath('//li[position()<2]/child::a/@href')  # Get the `href` attribute value of all `a` nodes under the current node
attribute_node = html.xpath('//li[2]//attribute::lang')  # Get the attribute value of the specified element of the current node
all_child_node = html.xpath('//ul/li[last()-1]//child::*')  # Get all child elements of the current node
all_attrs_node = html.xpath('//li[1]/a/attribute::*')  # Get the attribute values of all attributes of the current node
all_child_text_node = html.xpath('//li[1]//child::text()')  # Get all text child nodes of the current element
all_child_node_node = html.xpath('//li[1]/a/child::node()')  # Get all child nodes of the current node
ancestor_self = html.xpath('//a[@title="1"]/../ancestor-or-self::li')  # Get all ancestor `li` elements of the current element (including the current element)

print(ancestor_self)
```

BeautifulSoup4 Usage #

- `Beautiful Soup` automatically converts input documents to Unicode and output documents to UTF-8

- Install using `pip install beautifulsoup4`

- Import using `from bs4 import BeautifulSoup`
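The snippets in this part work on a `soup` object. As a minimal sketch of how such an object might be created, the example below parses an inline HTML string with Python's built-in `html.parser`. The markup and the parser choice are assumptions made for illustration; the project itself may load a local `index.html` with a different parser.

```python
from bs4 import BeautifulSoup

# Hypothetical document; the structure only loosely mirrors the examples in this article.
html_doc = """
<html><head><title>XPath Method</title></head>
<body>
<ul>
  <li class="item"><a id="a" class="a" href="/one">One</a></li>
  <li class="item"><a class="item" href="/two">Two</a></li>
</ul>
</body></html>
"""

# "html.parser" ships with Python; "lxml" is an alternative if it is installed.
soup = BeautifulSoup(html_doc, "html.parser")

title_text = soup.title.string  # text of the <title> tag
first_link = soup.a             # first <a> tag in the document
```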

Getting Content #

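- Tags have two important attributes: `name` and `attrs`

- There are three ways to get text content:

- `.string` returns the tag's single text child as a string (or `None` if the tag has more than one child); `.strings` is the variant that returns an iterator

- `.text` returns node text

- `.get_text()` returns node text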

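```python
from bs4 import BeautifulSoup

# Assumption: soup is built from the same local index.html used in the XPath demo
with open('./index.html', 'rb') as fp:
    soup = BeautifulSoup(fp, 'html.parser')

# Get title object
print(soup.title)  # <title>XPath Method</title>

# Get title content
print(soup.title.string)  # Returns the text as a single string
print(soup.title.text)
print(soup.title.get_text())
print(soup.find('title').get_text())
```

- Get objects through parent-child relationships

```python
# print(soup.title.parent)  # Returns parent node including content
print(soup.li.contents)  # List of direct child nodes (tags have no `.child` attribute)
print(soup.li.children)  # Returns an iterator
```

Get the first li tag #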

```python
print(soup.li.get_text())  # Matches the first one, returns all node text information
print(soup.find('li').text)

# Get `ul` child tags (empty lines are also considered children)
print(soup.ul.children)
for index, item in enumerate(soup.ul.children):
    print(index, item)
```

Get element attributes #

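- Use `element['attribute_name']` (or `element.get('attribute_name')`) to get a single attribute value. The dotted form `element.attribute_name` looks up a child tag with that name, not an attribute

- Use `element.attrs['attribute_name']` to read from the attribute dictionary; multi-valued attributes such as `class` are returned as a list

- If the same tag name is chained twice (`soup.element.element`), the first access returns the first matched element and the second searches inside it, returning the next matching element nested within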
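A short sketch of attribute access, assuming a single hypothetical `<a>` tag parsed with the built-in `html.parser`:

```python
from bs4 import BeautifulSoup

# Hypothetical tag with a multi-valued class attribute.
soup = BeautifulSoup('<a id="a" class="item active" href="/one">One</a>', "html.parser")
link = soup.a

href = link["href"]             # single attribute value as a string
missing = link.get("title")     # .get() returns None instead of raising KeyError
classes = link.attrs["class"]   # class is multi-valued, so this is a list
all_attrs = link.attrs          # every attribute as a dictionary
```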

Get multiple elements #

- `find` gets one element

- `find_all` gets multiple elements; use `limit` to cap the number, `recursive=True` to search all descendants, and `recursive=False` to search direct children only

- Multi-level search: `find_all` returns a list that can be iterated, and each item can be searched again with `find` or `find_all`
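The options above can be sketched on a small hypothetical list of links (the markup is an assumption for illustration):

```python
from bs4 import BeautifulSoup

# Hypothetical markup: three links, each nested inside an <li>.
html_doc = """
<ul>
  <li><a href="/one">One</a></li>
  <li><a href="/two">Two</a></li>
  <li><a href="/three">Three</a></li>
</ul>
"""
soup = BeautifulSoup(html_doc, "html.parser")

first = soup.find("a")                # only the first match
every = soup.find_all("a")            # all matches
two = soup.find_all("a", limit=2)     # stop after two matches

ul = soup.ul
direct_links = ul.find_all("a", recursive=False)   # <a> tags are grandchildren, so none
direct_items = ul.find_all("li", recursive=False)  # <li> tags are direct children

# Multi-level search: iterate one result list and search again inside each item.
texts = [li.find("a").get_text() for li in soup.find_all("li")]
```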

Get objects by specified attributes #

- `id` and `class` selectors

- `class` is special because it's a Python keyword. Use `class_` instead

```python
print(soup.find(id='a'))
print(soup.find('a', id='a'))
print(soup.find_all('a', id='a'))  # Can use index to query

# `class` is a keyword, use `class_`
print('class1', soup.find_all('a', class_='a'))
print('class2', soup.find_all('a', attrs={'class': 'item'}))  # More general
print('class3', soup.find_all('a', attrs={'class': 'item', 'id': 'a'}))  # Multiple conditions
```

Use functions as parameters to return elements #

```python
def judgeTitle1(t):
    # Keep only elements whose class is exactly 'a'
    return t == 'a'

print(soup.find_all(class_=judgeTitle1))
```

- Judge by length

```python
# Judge by length
import re  # Regular expression

reg = re.compile("item")

def judgeTitle2(t):
    # Match class values with length 5 that contain 'item'
    # (t is None for tags without a class; str(None) has length 4, so those are skipped)
    return len(str(t)) == 5 and bool(re.search(reg, t))

print(soup.find_all(class_=judgeTitle2))
```

Use CSS selectors #

- `select` method returns a list

- Can search by tag name, attribute, tag + class + id, combination
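A minimal sketch of these selector forms, using invented markup and class names (all assumptions for illustration):

```python
from bs4 import BeautifulSoup

# Hypothetical markup.
html_doc = """
<ul>
  <li class="item"><a id="a" class="link" href="/one">One</a></li>
  <li class="item"><a class="link" href="/two">Two</a></li>
</ul>
"""
soup = BeautifulSoup(html_doc, "html.parser")

by_tag = soup.select("li")               # tag name
by_class = soup.select(".item")          # class
by_id = soup.select("#a")                # id
by_attr = soup.select('a[href="/two"]')  # attribute value
combined = soup.select("li.item > a#a")  # tag + class + id, combined with a child step

first_only = soup.select_one("a")        # a single element instead of a list
```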

- Usage of BeautifulSoup Library in Python

- Detailed Usage of BeautifulSoup Library in Python

- Python Web Scraping: Extract Text with BeautifulSoup